Appearance

Pandas 速查表

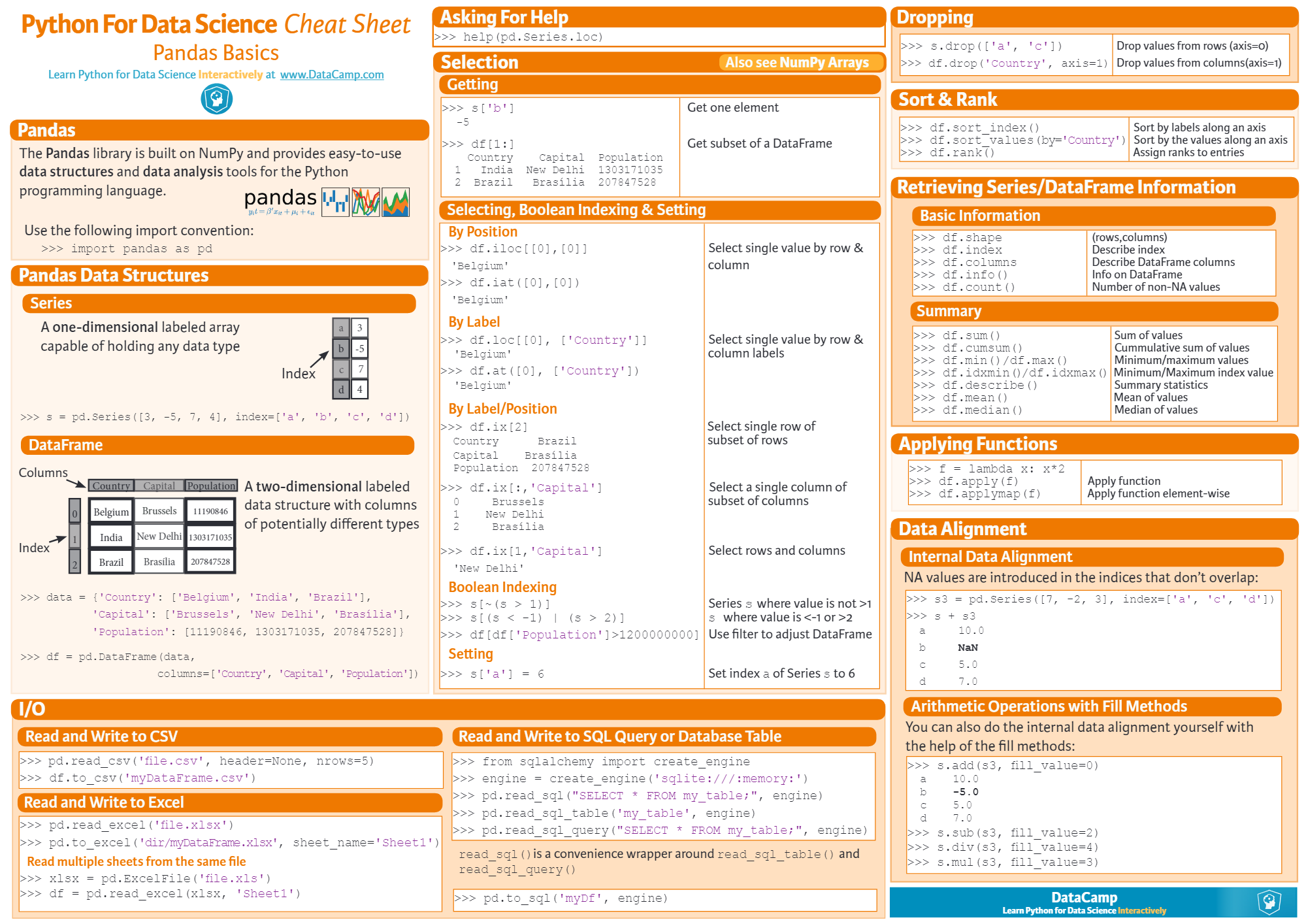

Pandas

Data analysis library. For examples see Plotly.

python

# $ pip3 install pandas matplotlib

import pandas as pd, matplotlib.pyplot as pltSeries

Ordered dictionary with a name.

python

>>> s = pd.Series([1, 2], index=['x', 'y'], name='a'); s

x 1

y 2

Name: a, dtype: int64python

<S> = pd.Series(<list>) # Uses list's indices for 'index'.

<S> = pd.Series(<dict>) # Uses dictionary's keys for 'index'.python

<el> = <S>.loc[key] # Or: <S>.iloc[i]

<S> = <S>.loc[coll_of_keys] # Or: <S>.iloc[coll_of_i]

<S> = <S>.loc[from_key : to_key_inc] # Or: <S>.iloc[from_i : to_i_exc]python

<el> = <S>[key/i] # Or: <S>.<key>

<S> = <S>[coll_of_keys/coll_of_i] # Or: <S>[key/i : key/i]

<S> = <S>[bools] # Or: <S>.loc/iloc[bools]python

<S> = <S> > <el/S> # Returns S of bools. Pairs items by keys.

<S> = <S> + <el/S> # Items with non-matching keys get value NaN.python

<S> = pd.concat(<coll_of_S>) # Concats multiple series into one long Series.

<S> = <S>.combine_first(<S>) # Adds items that are not yet present.

<S>.update(<S>) # Updates items that are already present.python

<S>.plot.line/area/bar/pie/hist() # Generates a plot. `plt.show()` displays it.- Indexing objects can't be tuples because

'obj[x, y]'is converted to'obj[(x, y)]'. - Pandas uses NumPy types like

'np.int64'. Series is converted to'float64'if we assign np.nan to any item. Use'<S>.astype(<str/type>)'to get converted Series. - Series will silently overflow if we run

'pd.Series([100], dtype="int8") + 100'!

Series — Aggregate, Transform, Map:

python

<el> = <S>.sum/max/mean/idxmax/all() # Or: <S>.agg(lambda <S>: <el>)

<S> = <S>.rank/diff/cumsum/ffill/interpol…() # Or: <S>.agg/transform(lambda <S>: <S>)

<S> = <S>.isna/fillna/isin([<el/coll>]) # Or: <S>.agg/transform/map(lambda <el>: <el>)text

+--------------+-------------+-------------+---------------+

| | 'sum' | ['sum'] | {'s': 'sum'} |

+--------------+-------------+-------------+---------------+

| s.apply(…) | 3 | sum 3 | s 3 |

| s.agg(…) | | | |

+--------------+-------------+-------------+---------------+text

+--------------+-------------+-------------+---------------+

| | 'rank' | ['rank'] | {'r': 'rank'} |

+--------------+-------------+-------------+---------------+

| s.apply(…) | | rank | |

| s.agg(…) | x 1.0 | x 1.0 | r x 1.0 |

| | y 2.0 | y 2.0 | y 2.0 |

+--------------+-------------+-------------+---------------+- Methods ffill(), interpolate(), fillna() and dropna() accept

'inplace=True'. - Agg() and transform() pass a Series to a function if it raises Type/Val/AttrError on a scalar.

- Last result has a multi-index. Use

'<S>[key_1, key_2]'to get its values.

DataFrame

Table with labeled rows and columns.

python

>>> df = pd.DataFrame([[1, 2], [3, 4]], index=['a', 'b'], columns=['x', 'y']); df

x y

a 1 2

b 3 4python

<DF> = pd.DataFrame(<list_of_rows>) # Rows can be either lists, dicts or series.

<DF> = pd.DataFrame(<dict_of_columns>) # Columns can be either lists, dicts or series.python

<el> = <DF>.loc[row_key, col_key] # Or: <DF>.iloc[row_i, col_i]

<S/DF> = <DF>.loc[row_key/s] # Or: <DF>.iloc[row_i/s]

<S/DF> = <DF>.loc[:, col_key/s] # Or: <DF>.iloc[:, col_i/s]

<DF> = <DF>.loc[row_bools, col_bools] # Or: <DF>.iloc[row_bools, col_bools]python

<S/DF> = <DF>[col_key/s] # Or: <DF>.<col_key>

<DF> = <DF>[<S_of_bools>] # Filters rows. For example `df[df.x > 1]`.

<DF> = <DF>[<DF_of_bools>] # Assigns NaN to items that are False in bools.python

<DF> = <DF> > <el/S/DF> # Returns DF of bools. S is treated as a row.

<DF> = <DF> + <el/S/DF> # Items with non-matching keys get value NaN.python

<DF> = <DF>.set_index(col_key) # Replaces row keys with column's values.

<DF> = <DF>.reset_index(drop=False) # Drops or moves row keys to column named index.

<DF> = <DF>.sort_index(ascending=True) # Sorts rows by row keys. Use `axis=1` for cols.

<DF> = <DF>.sort_values(col_key/s) # Sorts rows by passed column/s. Also `axis=1`.python

<DF> = <DF>.head/tail/sample(<int>) # Returns first, last, or random n rows.

<DF> = <DF>.describe() # Describes columns. Also info(), corr(), shape.

<DF> = <DF>.query('<query>') # Filters rows. For example `df.query('x > 1')`.python

<DF>.plot.line/area/bar/scatter(x=col_key, …) # `y=col_key/s`. Also hist/box(by=col_key).

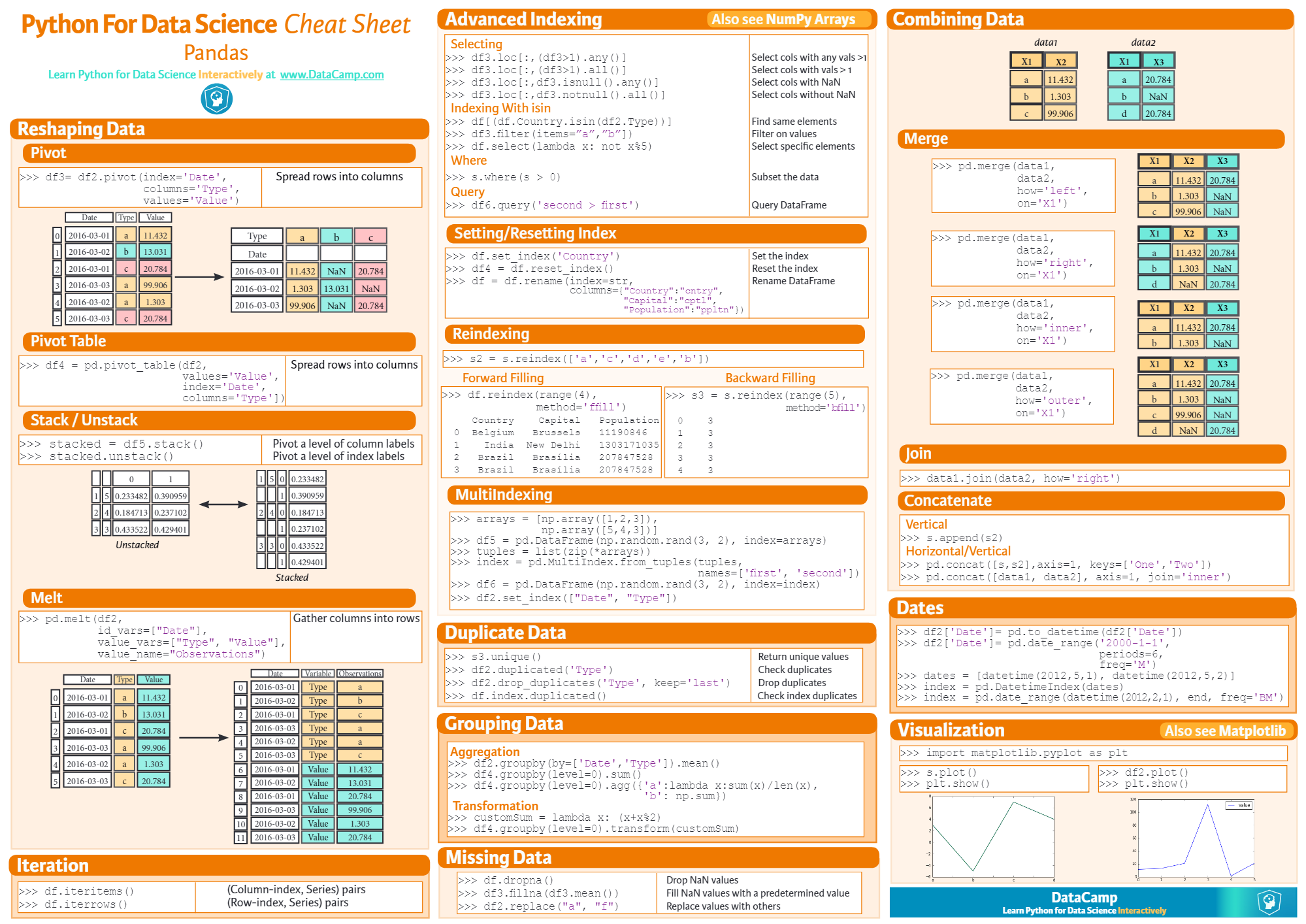

plt.show() # Displays the plot. Also plt.savefig(<path>).DataFrame — Merge, Join, Concat:

python

>>> df_2 = pd.DataFrame([[4, 5], [6, 7]], index=['b', 'c'], columns=['y', 'z']); df_2

y z

b 4 5

c 6 7text

+-----------------------+---------------+------------+------------+---------------------------+

| | 'outer' | 'inner' | 'left' | Description |

+-----------------------+---------------+------------+------------+---------------------------+

| df.merge(df_2, | x y z | x y z | x y z | Merges on column if 'on' |

| on='y', | 0 1 2 . | 3 4 5 | 1 2 . | or 'left_on/right_on' are |

| how=…) | 1 3 4 5 | | 3 4 5 | set, else on shared cols. |

| | 2 . 6 7 | | | Uses 'inner' by default. |

+-----------------------+---------------+------------+------------+---------------------------+

| df.join(df_2, | x yl yr z | | x yl yr z | Merges on row keys. |

| lsuffix='l', | a 1 2 . . | x yl yr z | 1 2 . . | Uses 'left' by default. |

| rsuffix='r', | b 3 4 4 5 | 3 4 4 5 | 3 4 4 5 | If Series is passed, it |

| how=…) | c . . 6 7 | | | is treated as a column. |

+-----------------------+---------------+------------+------------+---------------------------+

| pd.concat([df, df_2], | x y z | y | | Adds rows at the bottom. |

| axis=0, | a 1 2 . | 2 | | Uses 'outer' by default. |

| join=…) | b 3 4 . | 4 | | A Series is treated as a |

| | b . 4 5 | 4 | | column. To add a row use |

| | c . 6 7 | 6 | | pd.concat([df, DF([s])]). |

+-----------------------+---------------+------------+------------+---------------------------+

| pd.concat([df, df_2], | x y y z | | | Adds columns at the |

| axis=1, | a 1 2 . . | x y y z | | right end. Uses 'outer' |

| join=…) | b 3 4 4 5 | 3 4 4 5 | | by default. A Series is |

| | c . . 6 7 | | | treated as a column. |

+-----------------------+---------------+------------+------------+---------------------------+DataFrame — Aggregate, Transform, Map:

python

<S> = <DF>.sum/max/mean/idxmax/all() # Or: <DF>.apply/agg(lambda <S>: <el>)

<DF> = <DF>.rank/diff/cumsum/ffill/interpo…() # Or: <DF>.apply/agg/transform(lambda <S>: <S>)

<DF> = <DF>.isna/fillna/isin([<el/coll>]) # Or: <DF>.applymap(lambda <el>: <el>)text

+-----------------+---------------+---------------+---------------+

| | 'sum' | ['sum'] | {'x': 'sum'} |

+-----------------+---------------+---------------+---------------+

| df.apply(…) | x 4 | x y | x 4 |

| df.agg(…) | y 6 | sum 4 6 | |

+-----------------+---------------+---------------+---------------+text

+-----------------+---------------+---------------+---------------+

| | 'rank' | ['rank'] | {'x': 'rank'} |

+-----------------+---------------+---------------+---------------+

| df.apply(…) | | x y | |

| df.agg(…) | x y | rank rank | x |

| df.transform(…) | a 1.0 1.0 | a 1.0 1.0 | a 1.0 |

| | b 2.0 2.0 | b 2.0 2.0 | b 2.0 |

+-----------------+---------------+---------------+---------------+- All methods operate on columns by default. Pass

'axis=1'to process the rows instead. - Fifth result's columns are indexed with a multi-index. This means we need a tuple of column keys to specify a column:

'<DF>.loc[row_key, (col_key_1, col_key_2)]'.

DataFrame — Multi-Index:

python

<DF> = <DF>.xs(key, level=<int>) # Rows with key on passed level of multi-index.

<DF> = <DF>.xs(keys, level=<ints>, axis=1) # Cols that have first key on first level, etc.

<DF> = <DF>.set_index(col_keys) # Combines multiple columns into a multi-index.

<S/DF> = <DF>.stack/unstack(level=-1) # Combines col keys with row keys or vice versa.

<DF> = <DF>.pivot_table(index=col_key/s) # `columns=key/s, values=key/s, aggfunc='mean'`.DataFrame — Encode, Decode:

python

<DF> = pd.read_json/pickle(<path/url/file>) # Also accepts io.StringIO/BytesIO(<str/bytes>).

<DF> = pd.read_csv(<path/url/file>) # `header/index_col/dtype/usecols/…=<obj>`.

<DF> = pd.read_excel(<path/url/file>) # `sheet_name=None` returns dict of all sheets.

<DF> = pd.read_sql('<table/query>', <conn>) # SQLite3/SQLAlchemy connection (see #SQLite).

<list> = pd.read_html(<path/url/file>) # Run `$ pip3 install beautifulsoup4 lxml`.python

<dict> = <DF>.to_dict('d/l/s/…') # Returns columns as dicts, lists or series.

<str> = <DF>.to_json/csv/html/latex() # Saves output to a file if path is passed.

<DF>.to_pickle/excel(<path>) # Run `$ pip3 install "pandas[excel]" odfpy`.

<DF>.to_sql('<table_name>', <connection>) # Also `if_exists='fail/replace/append'`.- Read_csv() only parses dates of columns that were specified by 'parse_dates' argument. It automatically tries to detect the format, but it can be helped with 'date_format' or 'datefirst' arguments. Both dates and datetimes get stored as pd.Timestamp objects.

- If there's a single invalid date then it returns the whole column as a series of strings, unlike

'<S> = pd.to_datetime(<S>, errors="coerce")', which uses pd.NaT. - To get specific attributes from a series of Timestamps use

'<S>.dt.year/date/…'.

GroupBy

Object that groups together rows of a dataframe based on the value of the passed column.

python

<GB> = <DF>.groupby(col_key/s) # Splits DF into groups based on passed column.

<DF> = <GB>.apply/filter(<func>) # Filter drops a group if func returns False.

<DF> = <GB>.get_group(<el>) # Selects a group by grouping column's value.

<S> = <GB>.size() # S of group sizes. Same keys as get_group().

<GB> = <GB>[col_key] # Single column GB. All operations return S.python

<DF> = <GB>.sum/max/mean/idxmax/all() # Or: <GB>.agg(lambda <S>: <el>)

<DF> = <GB>.rank/diff/cumsum/ffill() # Or: <GB>.transform(lambda <S>: <S>)

<DF> = <GB>.fillna(<el>) # Or: <GB>.transform(lambda <S>: <S>)Divides rows into groups and sums their columns. Result has a named index that creates column 'z' on reset_index():

python

>>> df = pd.DataFrame([[1, 2, 3], [4, 5, 6], [7, 8, 6]], list('abc'), list('xyz'))

>>> gb = df.groupby('z'); gb.apply(print)

x y z

a 1 2 3

x y z

b 4 5 6

c 7 8 6

>>> gb.sum()

x y

z

3 1 2

6 11 13Rolling

Object for rolling window calculations.

python

<RS/RDF/RGB> = <S/DF/GB>.rolling(win_size) # Also: `min_periods=None, center=False`.

<RS/RDF/RGB> = <RDF/RGB>[col_key/s] # Or: <RDF/RGB>.<col_key>

<S/DF> = <R>.mean/sum/max() # Or: <R>.apply/agg(<agg_func/str>)